Day 124 MIT Sloan Fellows Class 2023, CSAIL Thesis Defence 2, Harini Suresh - How to prevent unintended consequences from AI -

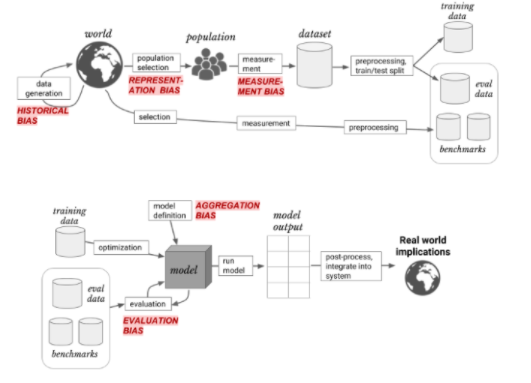

A framework for understanding sources of harm throughout the Machine Learning Life Cycle

As I have mentioned several times, I am really interested in data quality and its impact on AI.

Academic article: A Framework for Understanding Sources of Harm throughout the Machine Learning Life Cycle

https://arxiv.org/pdf/1901.10002.pdf

Harini explained beautifully how potential biases can affect AI systems and lead to serious, even fatal, consequences. There are many pitfalls between data collection and real-world application:

- Historical bias in data generation

- Representation bias in data selection

- Measurement bias in data measurement

- Evaluation bias in model evaluation

- Aggregation bias in the modeling process
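To make two of these biases concrete for myself, I wrote a minimal Python sketch. This is my own illustration, not code from the paper. It checks for representation bias by comparing each group's share of a dataset against a reference population, and it guards against evaluation bias by reporting accuracy per subgroup instead of a single aggregate number. The group labels "A" and "B", the assumed 50/50 reference shares, and the toy labels and predictions are all hypothetical.

```python
from collections import Counter, defaultdict


def group_shares(groups):
    """Fraction of samples that belong to each group."""
    counts = Counter(groups)
    total = len(groups)
    return {g: c / total for g, c in counts.items()}


def representation_gaps(sample_groups, population_shares):
    """Sample share minus reference share per group (negative = under-represented)."""
    sample = group_shares(sample_groups)
    return {g: sample.get(g, 0.0) - p for g, p in population_shares.items()}


def overall_accuracy(y_true, y_pred):
    """Single aggregate accuracy, which can hide poor performance on small groups."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each group (disaggregated evaluation)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical toy data: group B is under-sampled and the model fails on it.
groups = ["A"] * 8 + ["B"] * 2
y_true = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0, 0, 1]
reference_shares = {"A": 0.5, "B": 0.5}  # assumed population, for illustration only

if __name__ == "__main__":
    gaps = representation_gaps(groups, reference_shares)
    print({g: round(v, 2) for g, v in gaps.items()})  # {'A': 0.3, 'B': -0.3}
    print(overall_accuracy(y_true, y_pred))            # 0.8
    print(accuracy_by_group(y_true, y_pred, groups))   # {'A': 1.0, 'B': 0.0}
```

The aggregate accuracy of 0.8 looks acceptable, but the per-group view shows the model is wrong on every example from group B, which is also the group under-represented in the data. This is the kind of gap that a single headline metric hides.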

https://twitter.com/harini824/status/1376176722031312902

As Harini mentioned, ML is often treated in isolation from the systems that generate the data and the people behind it. However, we should always consider them together in order to avoid biased outcomes.

we can't divorce "an algorithm" or "the data" from the systems & people that created it, and those who will use & be affected by it -- it's about power y'all! bumping this thread of a few papers/books I like that delve deeper into this: